Enhancing evidence-informed interventions

Published on 06 April 2023

Overview

Insights generated from on-the-ground data on development interventions supported by UNDP and its partners facilitate learning and cross-learning on what works, where, why, and for whom.

Bridging knowledge gaps with robust data and methods

"When you can measure what you are speaking about, and express it in numbers, you know something about it."

—Lord Kelvin

Quality data is at the heart of evidence-informed decision-making, and its absence hinders the ability to discern what works in complex settings. High-quality data, though, does not constitute evidence unless coupled with rigorous methods.

By combining traditional and non-traditional data sources with robust causal analytical methods, it becomes possible to:

- Understand how much benefit each dollar invested returns to communities and the environment, and

- Shift conventional wisdom and reorient investments towards more effective interventions.

Learning what works on the ground contributes to the identification of solutions that are both viable and scalable in different contexts.

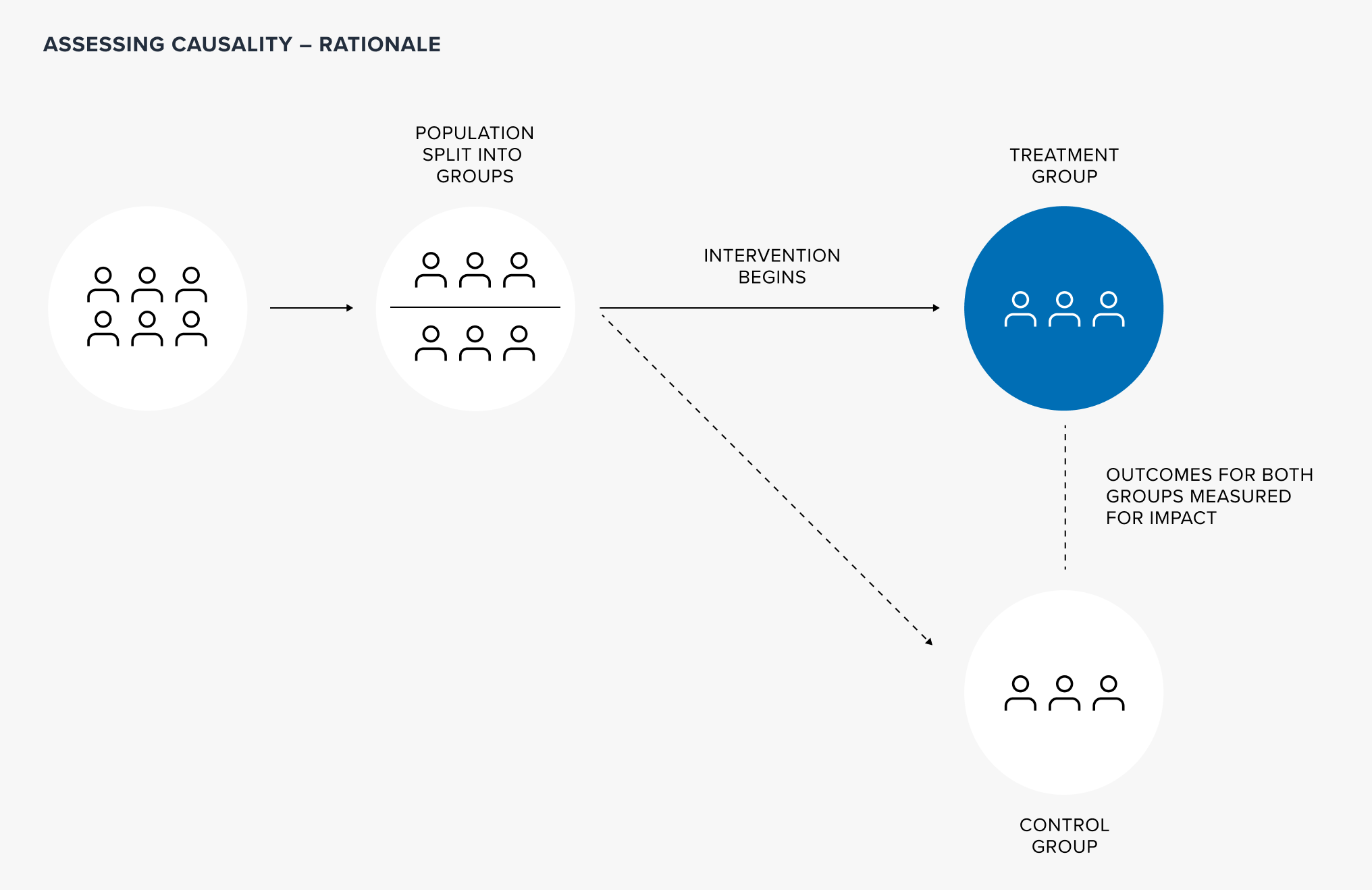

UNDP uses different scientific methods to measure impact. Experimental and quasi-experimental designs are the most prominent, and both rely on control and treatment groups. The control group (a benchmark group not exposed to the intervention) acts as a counterfactual¹, which allows for a valid comparison of similar groups at the same point in time. This approach enables attribution of impact to the intervention being tested and helps rule out confounding factors, such as the age, gender and educational level of beneficiaries, as the source of the result.

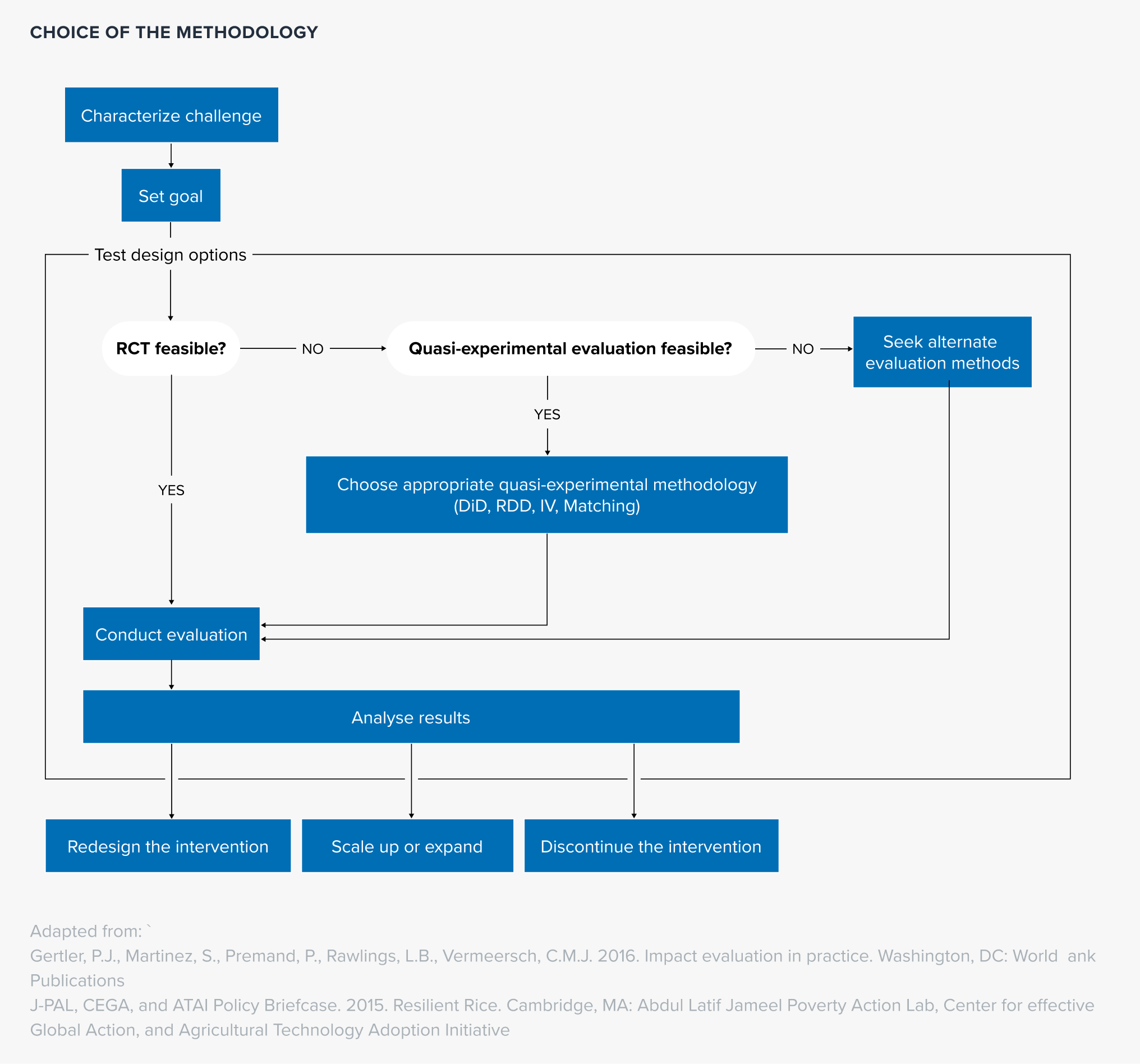
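The treatment-versus-control comparison described above can be sketched in a few lines of Python. This is a minimal illustration on simulated data, not UNDP's actual estimation procedure: the outcome scale, the sample size, the `true_effect` value and the function names are all assumptions made for the example, and the groups are made comparable here by a simple coin flip.

```python
import random
import statistics


def difference_in_means(treated, control):
    """Return (estimate, standard_error) for mean(treated) - mean(control)."""
    estimate = statistics.mean(treated) - statistics.mean(control)
    # Standard error of a difference between two independent sample means.
    se = (statistics.variance(treated) / len(treated)
          + statistics.variance(control) / len(control)) ** 0.5
    return estimate, se


def simulate(n=2000, true_effect=5.0, seed=0):
    """Simulate n households; all numbers here are hypothetical."""
    rng = random.Random(seed)
    treated, control = [], []
    for _ in range(n):
        # Outcome the household would have without the intervention.
        baseline = rng.gauss(50.0, 10.0)
        if rng.random() < 0.5:
            treated.append(baseline + true_effect)  # exposed to the intervention
        else:
            control.append(baseline)  # stands in for the counterfactual
    return treated, control


treated, control = simulate()
estimate, se = difference_in_means(treated, control)
print(f"estimated impact: {estimate:.2f} (standard error {se:.2f})")
```

Because both groups are drawn from the same population, the control group's average approximates what the treated group would have experienced without the intervention, so the gap between the two averages should land close to the simulated effect.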

In an experimental design, the selection of control and treatment groups is random² and integrated at the inception of an intervention. When random selection is not possible, programme managers and policymakers can turn to quasi-experimental methods, such as regression discontinuity design, instrumental variables, difference-in-differences and matching.
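As one illustration of a quasi-experimental method, the simplest two-group, two-period difference-in-differences estimate can be computed as below. This is a sketch under stated assumptions: the outcome values are invented for the example, the function name `diff_in_diff` is hypothetical, and the estimate is only meaningful if the two groups would have followed parallel trends in the absence of the intervention.

```python
from statistics import mean


def diff_in_diff(treat_pre, treat_post, ctrl_pre, ctrl_post):
    """Change in the treated group minus change in the comparison group."""
    treated_change = mean(treat_post) - mean(treat_pre)
    control_change = mean(ctrl_post) - mean(ctrl_pre)
    return treated_change - control_change


# Hypothetical outcomes (for example, yields) before and after an intervention.
treat_pre = [10.0, 12.0, 11.0]
treat_post = [16.0, 18.0, 17.0]
ctrl_pre = [8.0, 9.0, 10.0]
ctrl_post = [10.0, 11.0, 12.0]

effect = diff_in_diff(treat_pre, treat_post, ctrl_pre, ctrl_post)
print(f"difference-in-differences estimate: {effect}")
```

Subtracting the comparison group's change removes trends that affect both groups equally, which is why the method can be used when groups were not randomly assigned and start from different levels.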

To backstop these designs, the availability of quality, accurate and frequent data is critical. While new technologies (such as remote-sensing and web scraping) coupled with increased computing power create new opportunities for gathering and analysing data, traditional household surveys and administrative data still play a crucial role in measuring policy and intervention outcomes.

Beyond the co-production of scientific knowledge, which strengthens country engagement and local capacities, results from causal assessments can be incorporated into programming, enabling programme managers and policymakers to plan interventions in a rational and evidence-based manner.

References

[1] A counterfactual is what would have happened had a given intervention not been undertaken.

[2] Specifically, randomization means that anyone of an equivalent group could have been allocated to control or treatment. This allows for valid statistical inference on the causal effect of a given intervention.

The M-CLIMES Project has worked in food-insecure districts in Malawi to modernize climate information and early warning systems. There is evidence that the project increased crop yields by 60% and had a positive impact on the adaptation capacity of farmers at risk from climate change.

Leveraging public goods with strong core evidence

Since 2018, UNDP has been collecting tailored evidence on effective development interventions in 12 countries. These assessments involve theory-based designs and the collection of unique data on the interventions, a process accompanied by capacity-building activities. Over 19,000 households were interviewed, gathering data on more than 400 metrics related to socioeconomic factors, farming practices, climate adaptation, and resilience, among others. Scaling up these evidence-based initiatives within the organization could expand global knowledge by providing public goods on the effectiveness of development interventions, capitalizing on UNDP's presence in 170 countries and territories.

To date, assessments in Bangladesh, Malawi and Cambodia have been successfully completed, while the evaluations in the remaining countries are actively in progress.

Please note: The designations employed and the presentation of material on this map do not imply the expression of any opinion whatsoever on the part of the Secretariat of the United Nations or UNDP concerning the legal status of any country, territory, city or area or its authorities, or concerning the delimitation of its frontiers or boundaries.

Stock of knowledge

Increasing the number of rigorous assessments based on on-the-ground data collection can enhance our understanding of 'what works,' for whom, and the underlying mechanisms ('why' and 'how').

Currently, robust measurements of intervention effectiveness are scarce, especially in low- and middle-income countries. New technologies play a crucial role in addressing this evidence gap by identifying interventions that have already been assessed and extracting valuable insights from them. By leveraging AI to categorize assessments conducted globally over the past 20 years across UNDP’s signature solutions, the graph below reveals a growing trend in the use of impact evaluations. However, it is worth noting that most studies still focus on specific intervention types, such as health.

Country cases

Explore the details below.